Hidden Algorithms, Hurting Kids? Why AI Literacy Matters

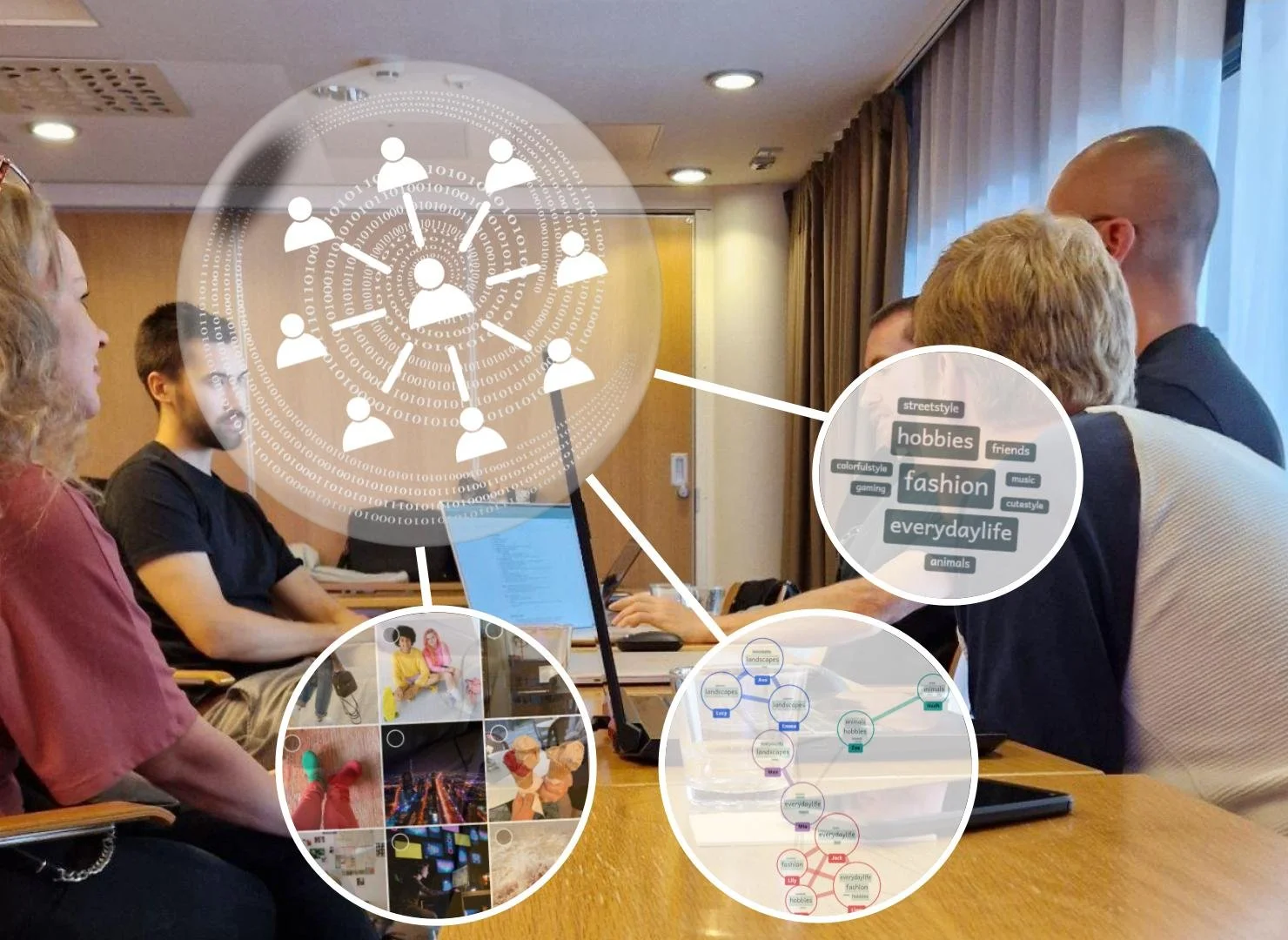

As part of the Generation AI research project, we attended the project’s annual meeting last week. We discussed and brainstormed on AI awareness, AI ethics, and how to maintain our national resilience in a rapidly changing digital world.

When most people think of artificial intelligence, they picture chatbots or quirky image generators. However, the AI shaping our lives most profoundly is less visible: the algorithms that power social media. These systems operate around the clock, deciding what we see, read, and believe.

For young people especially, this raises a pressing question: are they safe in environments run by AI systems designed to maximize engagement rather than well-being?

How AI systems run social media

Platforms like YouTube and TikTok rely on algorithms that track user behavior and recommend content accordingly. On the surface, this personalization feels helpful. But over time, it narrows the range of content users encounter, creating echo chambers where the world seems to agree with them on everything.

The consequences are serious: reinforced biases and polarization, reduced critical thinking, and addictive, endless content loops. What looks like harmless convenience can instead undermine healthy discourse, mental health, and even democratic values.
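To make the narrowing effect concrete, here is a minimal toy simulation in Python. It is only a sketch under stated assumptions: the topic list, the `build_feed` and `simulate` functions, and the scoring rule are all hypothetical and invented for illustration. It does not represent how YouTube, TikTok, or any real platform ranks content. The simulated user clicks only on items from one favorite topic, and the feed hands out its five slots roughly in proportion to past clicks.

```python
from collections import Counter

# Hypothetical topics, chosen only for illustration.
TOPICS = ["sports", "music", "science", "news", "gaming"]


def build_feed(clicks, feed_size=5):
    """Fill a feed, giving topics with more past clicks more slots."""
    slots = Counter()
    feed = []
    for _ in range(feed_size):
        # Score = (clicks + 1) / (slots already given + 1).
        # More clicks earn more slots; the "+ 1" gives every topic a
        # small starting chance. Ties go to the earlier topic in TOPICS.
        best = max(TOPICS, key=lambda t: (clicks[t] + 1) / (slots[t] + 1))
        slots[best] += 1
        feed.append(best)
    return feed


def simulate(favorite="gaming", rounds=5):
    """Return how many distinct topics appear in the feed each round."""
    clicks = Counter()
    variety = []
    for _ in range(rounds):
        feed = build_feed(clicks)
        variety.append(len(set(feed)))
        # Assumption: the user clicks every item from the favorite
        # topic and ignores everything else.
        clicks[favorite] += feed.count(favorite)
    return variety


if __name__ == "__main__":
    print(simulate())  # prints [5, 5, 4, 2, 1]
```

In this toy setup, the feed starts with five different topics and shows only one after five rounds, even though the rule never explicitly removes anything. Real systems are far more complex, but the feedback loop between clicks and recommendations works on the same basic principle.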

A broader challenge to our society

Parents often worry about the obvious dangers online: cyberbullying, predatory behavior, or harmful content. But the less visible influence of recommendation algorithms may be even more harmful. A Finnish study found that children exposed to misinformation just once a week were almost four times more likely to report frequent feelings of anxiety or depression (source). By shaping how children perceive reality itself, these algorithms don’t just undermine critical thinking; they also affect children’s mental well-being. In this sense, the algorithms behind social media are not just a safety issue; they represent a broader challenge to democratic society.

Building AI literacy

The way to solve this is education. By teaching students to recognize, question, and critically evaluate AI systems, we prepare them not just to use technology but to live thoughtfully within it. AI literacy is not just about using AI. It’s about awareness, resilience, and responsibility in a world where algorithms increasingly shape how we see ourselves and one another.

Through the national Generation AI project, we are building classroom tools that help students and teachers demystify AI. One example is the Social Media Machine, a tool that shows how engagement-driven algorithms shape online experiences. Tested in classrooms and validated through research, it gives students a clearer view of the hidden systems around them.

More recently, the project has turned to demystifying chatbots like ChatGPT, ensuring students see these systems not as magical or all-knowing, but as human-made programs with clear limitations.

What can you do in your classroom now?

From preschool to high school, from urban innovation labs to rural schools, AI education is possible everywhere. Here’s what we’ve learned:

Make learning relevant and project-based

Start early and keep it playful

Support your teachers with efficient training

If you want to bring AI learning to your school through teacher training, curriculum or student projects, we’re here to help.

Let’s connect. We’re happy to share more from our global work and support your journey with AI in education.

Contact

Kaisu Pallaskallio

CEO, Code School Finland

kaisu@codeschool.fi

+358 44 355 7355